5. TOAR Near Realtime Data Processing

We currently collect near real-time data from two data providers: UBA (German Environment Agency 1) and OpenAQ (open air quality data 2). The corresponding data harvesting procedures are described below.

5.1. UBA Data Harvesting

Since 2001, the German Umweltbundesamt (UBA 1) has provided preliminary data from a growing number (currently 1004) of German surface stations. The data exchange is based on the manual „Luftqualitätsdaten- und Informationsaustausch in Deutschland“, Version V 5, April 2019 (in German).

Hourly data for at least ozone, SO2, PM10, PM2.5, NO2 and CO are updated daily for the current day and are provided for up to a maximum of four previous days. Data is fetched from the UBA service four times per day, at 08:00, 12:00, 18:00 and 22:00 local time.

The software for processing the data from UBA is available at https://gitlab.version.fz-juelich.de/esde/toar-data/toar-db-data/-/tree/master/toar_v2/harvesting/UBA_NRT.

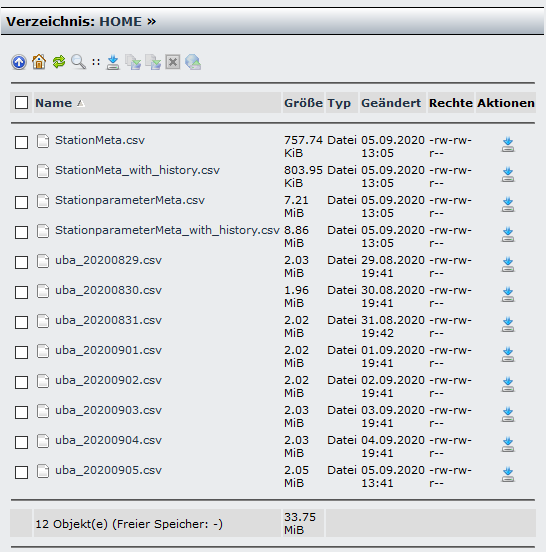

The files StationparameterMeta.csv, StationMeta.csv and uba_%s.csv (where %s denotes a date) are harvested four times daily from http://www.luftdaten.umweltbundesamt.de/files/ (secured with access credentials).
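As an illustration of the file naming described above, the following minimal sketch builds the list of URLs for one harvesting run. It rests on several assumptions that are not confirmed by this section: that there is one uba_%s.csv file per day, that the date is written as YYYYMMDD, and that a run covers the current day plus four previous days. The function name `files_to_fetch` and its parameters are hypothetical, and authentication is left out.

```python
from datetime import date, timedelta

# Base URL and file names as given in the text above.
BASE_URL = "http://www.luftdaten.umweltbundesamt.de/files/"
METADATA_FILES = ["StationparameterMeta.csv", "StationMeta.csv"]


def files_to_fetch(today, days_back=4, date_format="%Y%m%d"):
    """Return the URLs for one harvesting run (hypothetical helper).

    date_format is an assumption: the text only says that %s in
    uba_%s.csv denotes a date, not how that date is written.
    """
    urls = [BASE_URL + name for name in METADATA_FILES]
    # Current day first, then up to `days_back` previous days.
    for offset in range(days_back + 1):
        day = today - timedelta(days=offset)
        urls.append(BASE_URL + "uba_%s.csv" % day.strftime(date_format))
    return urls
```

If the actual date pattern differs, only the `date_format` argument needs to change.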

Fig. 5.1 Snapshot from 2020-09-05 17:00 CEST

| name of component in original file | name of component in TOAR database |
|---|---|
| Schwefeldioxid | so2 |
| Ozon | o3 |
| Stickstoffdioxid | no2 |
| Stickstoffmonoxid | no |
| Kohlenmonoxid | co |
| Temperatur | temp |
| Windgeschwindigkeit | wspeed |
| Windrichtung | wdir |
| PM10 | pm10 |
| PM2_5 | pm2p5 |
| Relative Feuchte | relhum |
| Benzol | benzene |
| Ethan | ethane |
| Methan | ch4 |
| Propan | propane |
| Toluol | toluene |
| o-Xylol | oxylene |
| mp-Xylol | mpxylene |
| Luftdruck | press |
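The component mapping above can be expressed as a simple lookup table. This is a minimal sketch, not the code from the UBA_NRT repository; the names `UBA_TO_TOAR_COMPONENT` and `toar_component` are hypothetical, and returning `None` for unmapped components is an assumption about how unknown names might be handled.

```python
# Mapping copied from the table above: UBA component name -> TOAR name.
UBA_TO_TOAR_COMPONENT = {
    "Schwefeldioxid": "so2",
    "Ozon": "o3",
    "Stickstoffdioxid": "no2",
    "Stickstoffmonoxid": "no",
    "Kohlenmonoxid": "co",
    "Temperatur": "temp",
    "Windgeschwindigkeit": "wspeed",
    "Windrichtung": "wdir",
    "PM10": "pm10",
    "PM2_5": "pm2p5",
    "Relative Feuchte": "relhum",
    "Benzol": "benzene",
    "Ethan": "ethane",
    "Methan": "ch4",
    "Propan": "propane",
    "Toluol": "toluene",
    "o-Xylol": "oxylene",
    "mp-Xylol": "mpxylene",
    "Luftdruck": "press",
}


def toar_component(uba_name):
    """Return the TOAR variable name, or None if the component is not mapped."""
    return UBA_TO_TOAR_COMPONENT.get(uba_name.strip())
```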

| term of station_type in original file | term of station_type in TOAR database |
|---|---|
| Hintergrund | background |
| Industrie | industrial |
| Verkehr | traffic |

| term of station_type_of_area in original file | term of station_type_of_area in TOAR database |
|---|---|
| ländlich abgelegen | rural |
| ländliches Gebiet | rural |
| ländlich regional | rural |
| ländlich stadtnah | rural |
| städtisches Gebiet | urban |
| vorstädtisches Gebiet | suburban |
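The two station metadata mappings can be sketched in the same way. The dictionaries below are copied from the tables above; the function `translate_station` and the choice to raise a `KeyError` for unknown terms are assumptions made for illustration only.

```python
# Mappings copied from the tables above: UBA term -> TOAR term.
STATION_TYPE = {
    "Hintergrund": "background",
    "Industrie": "industrial",
    "Verkehr": "traffic",
}

STATION_TYPE_OF_AREA = {
    "ländlich abgelegen": "rural",
    "ländliches Gebiet": "rural",
    "ländlich regional": "rural",
    "ländlich stadtnah": "rural",
    "städtisches Gebiet": "urban",
    "vorstädtisches Gebiet": "suburban",
}


def translate_station(uba_type, uba_area):
    """Translate both station terms; unknown terms raise KeyError."""
    return {
        "station_type": STATION_TYPE[uba_type],
        "station_type_of_area": STATION_TYPE_OF_AREA[uba_area],
    }
```

Note that all four "ländlich" variants collapse to `rural`, so the finer distinction made in the UBA files cannot be recovered from the translated term alone.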

| component | original unit | unit in TOAR DB | unit conversion while ingesting |
|---|---|---|---|
| co | mg m-3 | ppb | 858.95 |
| no | ug m-3 | ppb | 0.80182 |
| no2 | ug m-3 | ppb | 0.52297 |
| o3 | ug m-3 | ppb | 0.50124 |
| so2 | ug m-3 | ppb | 0.37555 |
| benzene | ug m-3 | ppb | 0.30802 |
| ethane | ug m-3 | ppb | 0.77698 |
| ch4 | ug m-3 | ppb | 1.49973 |
| propane | ug m-3 | ppb | 0.52982 |
| toluene | ug m-3 | ppb | 0.26113 |
| oxylene | ug m-3 | ppb | 0.22662 |
| mpxylene | ug m-3 | ppb | 0.22662 |
| pm1 | ug m-3 | ug m-3 | |
| pm10 | ug m-3 | ug m-3 | |
| pm2p5 | ug m-3 | ug m-3 | |
| press | hPa | hPa | |
| temp | degree celsius | degree celsius | |
| wdir | degree | degree | |
| wspeed | m s-1 | m s-1 | |
| relhum | % | % | |
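The unit conversion listed above can be illustrated with a short sketch. It assumes that the listed number is a factor by which the original value is multiplied, and that components without a listed number are stored unchanged. The names `CONVERSION_FACTOR` and `to_toar_unit` are hypothetical and do not come from the harvesting software.

```python
# Factors copied from the table above. Components without an entry
# (pm1, pm10, pm2p5, press, temp, wdir, wspeed, relhum) keep their unit.
CONVERSION_FACTOR = {
    "co": 858.95,        # mg m-3 -> ppb
    "no": 0.80182,       # ug m-3 -> ppb
    "no2": 0.52297,      # ug m-3 -> ppb
    "o3": 0.50124,       # ug m-3 -> ppb
    "so2": 0.37555,      # ug m-3 -> ppb
    "benzene": 0.30802,  # ug m-3 -> ppb
    "ethane": 0.77698,   # ug m-3 -> ppb
    "ch4": 1.49973,      # ug m-3 -> ppb
    "propane": 0.52982,  # ug m-3 -> ppb
    "toluene": 0.26113,  # ug m-3 -> ppb
    "oxylene": 0.22662,  # ug m-3 -> ppb
    "mpxylene": 0.22662, # ug m-3 -> ppb
}


def to_toar_unit(component, value):
    """Convert a value to the TOAR DB unit.

    Assumption: the factor is applied by multiplication; components
    without a factor are returned unchanged.
    """
    return value * CONVERSION_FACTOR.get(component, 1.0)
```

For example, under this assumption an ozone value of 100 ug m-3 would be stored as about 50.1 ppb, while a PM10 value is passed through as is.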

Validated data for the previous year becomes available by May 31st at the latest. This data is requested by email and then processed from the database dumps we receive. The validated data supersedes the preliminary near real-time data. The near real-time data remains in the database but is hidden from the standard user access procedures via the data quality flag settings.
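The effect of superseding preliminary data can be sketched as follows. This is only one possible reading of the mechanism: the flag labels `PRELIMINARY` and `VALIDATED` and the function `standard_view` are hypothetical, since the actual TOAR data quality flags and access procedures are not listed in this section.

```python
PRELIMINARY = "preliminary"  # hypothetical flag label
VALIDATED = "validated"      # hypothetical flag label


def standard_view(records):
    """Return what a standard user would see (illustrative only).

    records: list of (timestamp, value, flag) tuples.
    Preliminary values stay in `records` but are left out of the
    result wherever a validated value exists for the same timestamp.
    """
    validated_times = {t for t, _, flag in records if flag == VALIDATED}
    return [
        (t, value, flag)
        for t, value, flag in records
        if flag == VALIDATED or t not in validated_times
    ]
```

The input list is not modified, which mirrors the statement that the near real-time data remains in the database and is only hidden from standard access.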

5.2. OpenAQ

OpenAQ 2 collects data in 93 countries from real-time government and research-grade sources. Since 26 November 2016, OpenAQ has gathered more than one billion records, which total 306 gigabytes in size and cover the air quality variables BC, CO, NO2, O3, PM10, PM2.5 and SO2.

We began working with OpenAQ data about 6 years ago, but this has always been difficult because of unsuitable data structures and metadata and unknown data quality. After an unsuccessful first attempt to set up a processing pipeline, we redid much of this work about 2 years ago. We then concentrated on those world regions where OpenAQ provides the only air quality information available to us. To our knowledge, we never included all OpenAQ data; data from Europe, for example, is not included. We had neither the time nor the capacity to rigorously inspect the OpenAQ time series, and we have always stated clearly that this data review must be undertaken by the TOAR scientists. Reported observations of limited data coverage and often questionable data quality agree with our own non-systematic analysis.

Footnotes